All models are wrong, but some are useful.

—George E. P. Box

Model-Based Systems Engineering

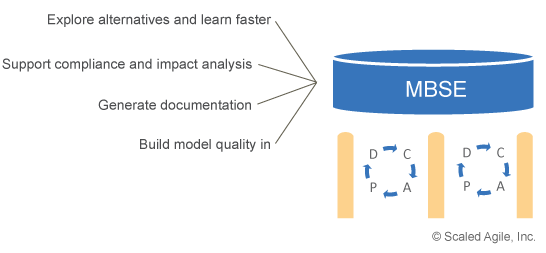

Model-Based Systems Engineering (MBSE) is the practice of developing a set of related system models that help define, design, and document a system under development. These models provide an efficient way to explore, update, and communicate system aspects to stakeholders, while significantly reducing or eliminating dependence on traditional documents. MBSE applies modeling as a cost-effective way to explore and document system characteristics. By testing and validating system characteristics early, models facilitate timely learning of properties and behaviors, enabling fast feedback on requirements and design decisions.

MBSE historically focused on expressing and recording requirements, design, analysis, and verification information [1]. As modeling technology matures, it provides even more value by accelerating learning (e.g., simulation) and providing better insight into the physical world (e.g., digital twins) [2]. Both are important for evolving live systems and enabling Enterprise Solution Delivery.

Although models are not a perfect representation of a system, they provide knowledge and feedback sooner and more cost-effectively than implementation alone. They also allow complex system and system-of-systems interactions to be simulated with appropriate fidelity to accelerate learning. In practice, engineers use models to gain knowledge and to guide system implementation. In some cases, they use models to build the implementation directly (e.g., electrical CAD, mechanical CAD).

Details

Lean practices support fast learning through a continuous flow of development work to gain fast feedback on decisions. MBSE is a discipline and a Lean tool that allows engineers to quickly and incrementally learn about the system under development before the cost of change gets too high.

Models are used to explore the structure, behavior, and operational characteristics of system elements, evaluate design alternatives, and validate assumptions faster and earlier in the system life cycle. This is particularly useful for large and complex systems—satellites, aircraft, medical systems, and the like—where the solution must be proven practical beyond all possible doubt before, for example, launching into space or connecting to the first patient. Models also record and communicate decisions that will be useful to others. This information serves as documentation for Compliance, impact analysis, and other needs. In SAFe, model information is recorded as part of the Solution Intent, most often created by the work of Enablers.

The following sections provide guidance on adopting MBSE.

Explore Alternatives and Learn Faster

Modeling supports fast learning cycles (see SAFe Principle #4 – Build incrementally with fast, integrated learning cycles) and helps mitigate risks early in the product life cycle. Models facilitate early learning by testing and validating specific system characteristics, properties, or behaviors, enabling fast feedback on design decisions.

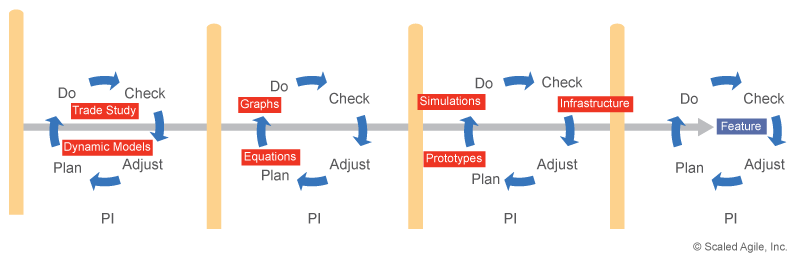

Models come in many forms: dynamic and solid models, graphs, equations, simulations, and prototypes. As Figure 2 illustrates, each provides a different perspective on one or more system characteristics that enable the creation of future Capabilities and Features.

Models may predict performance (response time, reliability) or physical properties (heat, radiation, strength). Or they may explore design alternatives for user experience or response to an external stimulus. But models aren’t the only approach to exploring design alternatives. Design Thinking and user-centered design are synergistic with MBSE and also help validate assumptions sooner.

Bridge Physical and Virtual Worlds

Digital twin technology supports MBSE. A digital twin is a virtual instance of a physical system that is kept synchronized using the physical twin's operational data, such as performance, maintenance, and health data. Integrating the physical and virtual worlds validates virtual models and helps engineers improve system analysis, better predict failures or downtime, and plan more accurate maintenance schedules.
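As a minimal sketch of the synchronization idea, the Python below keeps a virtual record of one asset up to date from its operational readings and uses that record to predict when maintenance will be due. The asset, the `Telemetry` fields, and the linear wear model are hypothetical assumptions chosen for illustration; a real twin would use whatever degradation model its domain provides.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Telemetry:
    """One operational reading from the physical twin (hypothetical fields)."""
    operating_hours: float
    bearing_wear_mm: float


@dataclass
class DigitalTwin:
    """Virtual instance of one physical asset, kept in sync by its telemetry."""
    asset_id: str
    wear_limit_mm: float
    history: list[Telemetry] = field(default_factory=list)

    def sync(self, reading: Telemetry) -> None:
        """Update the virtual state with the latest operational data."""
        if self.history and reading.operating_hours <= self.history[-1].operating_hours:
            raise ValueError("readings must arrive in increasing operating-hour order")
        self.history.append(reading)

    def wear_rate(self) -> float:
        """Average wear per operating hour between the first and latest readings."""
        if len(self.history) < 2:
            raise ValueError("need at least two readings to estimate a trend")
        first, last = self.history[0], self.history[-1]
        hours = last.operating_hours - first.operating_hours
        return (last.bearing_wear_mm - first.bearing_wear_mm) / hours

    def hours_until_maintenance(self) -> float:
        """Predict operating hours left before the wear limit (linear assumption)."""
        rate = self.wear_rate()
        if rate <= 0:
            return float("inf")  # no measurable wear trend yet
        remaining = max(self.wear_limit_mm - self.history[-1].bearing_wear_mm, 0.0)
        return remaining / rate


twin = DigitalTwin("pump-01", wear_limit_mm=2.0)
twin.sync(Telemetry(operating_hours=0.0, bearing_wear_mm=0.0))
twin.sync(Telemetry(operating_hours=1000.0, bearing_wear_mm=0.5))
print(round(twin.hours_until_maintenance()))  # → 3000
```

Each new reading refines the prediction, which is the feedback loop between the physical and virtual twins in miniature.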

Digital twins support business agility by better predicting when future enhancements and product upgrades will be necessary, which makes Solution Roadmaps more accurate. They can also uncover new business opportunities by making learning faster, cheaper, and more reliable. See [3] for an overview of digital twins.

Support Compliance and Impact Analysis

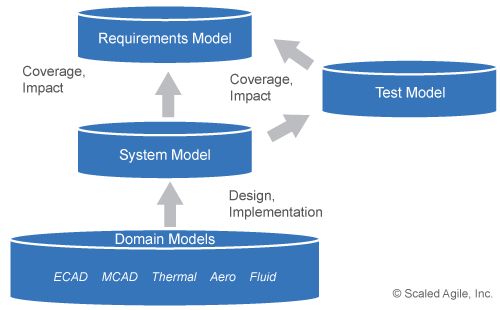

Historically, system decisions for requirements, designs, tests, interfaces, allocations, and others are maintained in a variety of sources, including documents, spreadsheets, domain-specific tools, and sometimes even on paper. MBSE takes a holistic, system approach to manage system information and data relationships, treating all information as a model. Figure 3 shows a generic structure linking information from multiple types of models.

Traceability helps teams quickly and reliably understand the impact of changes to the system, or the impact of a change at the domain level on other parts of the system and its requirements. For example, teams and System Architect/Engineers use model information to support the Epic review process. Traceability also provides the objective evidence needed to address many regulatory and contractual compliance concerns. And traceability can integrate digital twins into a digital thread to provide connectivity across the system life cycle.
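Impact analysis over trace links is essentially a graph traversal. The sketch below assumes a hypothetical `TraceModel` with directed links (requirement to design, design to test) and finds every element downstream of a changed one using a breadth-first search. It follows links in one direction only; a fuller analysis might also walk upstream to the requirements a change touches.

```python
from __future__ import annotations

from collections import defaultdict, deque


class TraceModel:
    """Minimal traceability graph: model elements joined by directed trace links."""

    def __init__(self) -> None:
        self.kinds: dict[str, str] = {}
        self.links: dict[str, set[str]] = defaultdict(set)

    def add(self, element_id: str, kind: str) -> None:
        self.kinds[element_id] = kind

    def link(self, source: str, target: str) -> None:
        """Record a trace link, e.g. requirement -> design or design -> test."""
        for element_id in (source, target):
            if element_id not in self.kinds:
                raise KeyError(f"unknown element {element_id!r}")
        self.links[source].add(target)

    def impact_of(self, changed: str) -> set[str]:
        """Breadth-first walk of downstream links from a changed element."""
        affected: set[str] = set()
        queue = deque([changed])
        while queue:
            current = queue.popleft()
            for downstream in self.links.get(current, set()):
                if downstream != changed and downstream not in affected:
                    affected.add(downstream)
                    queue.append(downstream)
        return affected


model = TraceModel()
for element_id, kind in [("REQ-1", "requirement"), ("DES-1", "design"),
                         ("DES-2", "design"), ("TEST-1", "test"), ("TEST-2", "test")]:
    model.add(element_id, kind)
model.link("REQ-1", "DES-1")
model.link("DES-1", "TEST-1")
model.link("DES-2", "TEST-2")
print(sorted(model.impact_of("REQ-1")))  # → ['DES-1', 'TEST-1']
```

The `affected` set doubles as the visited set, so cyclic links cannot cause an infinite loop.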

A Lean, continuous-change environment amplifies the need for related models. While manual solutions to manage related information for coverage and compliance may suffice in a phase-gate process, they will be quickly overwhelmed in an Agile environment that encourages frequent and constant change.

Generate Documentation for Compliance

Many product domains require documents for regulatory compliance (e.g., FAA, FDA) or contractual obligations (e.g., CDRLs in government contracting). With an MBSE approach to system development, models contain most, if not all, of the information required for compliance and can be used to generate the necessary objective evidence. Models act as a single source of truth and ensure consistency across the many documents. Models can also generate documents targeted at different stakeholders, who may have individual system perspectives or who should only have access to subsets of the information (e.g., Suppliers).
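To illustrate one model feeding several stakeholder-specific documents, the sketch below tags each model element with the audiences allowed to see it and renders a filtered plain-text view. The `Element` fields, the audience tags, and the element content are hypothetical placeholders, not any MBSE tool's schema; real tools generate documents through their own templates.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    element_id: str
    title: str
    text: str
    audiences: frozenset[str]  # hypothetical access tags, e.g. "supplier", "regulator"


def generate_document(elements: list[Element], audience: str, heading: str) -> str:
    """Render a plain-text view containing only the elements this audience may see."""
    lines = [heading, ""]
    for element in sorted(elements, key=lambda e: e.element_id):
        if audience in element.audiences:
            lines.append(f"{element.element_id} {element.title}")
            lines.append(f"    {element.text}")
    return "\n".join(lines)


elements = [
    Element("IF-1", "Power interface", "Placeholder interface definition.",
            frozenset({"supplier", "regulator"})),
    Element("SAF-1", "Hazard analysis", "Placeholder hazard analysis.",
            frozenset({"regulator"})),
]
print(generate_document(elements, "supplier", "Supplier Interface Document"))
```

Because every view is rendered from the same `elements` list, the supplier and regulator documents cannot drift apart.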

While all products and programs will likely require formal documents, System Engineers are encouraged to work directly with Customers and/or regulatory agencies to agree on the minimum set sufficient to meet their obligations. Most, if not all, of the information resides in engineering models that can and should be used, where possible, for inspections and formal reviews.

Build Model Quality In

Because so many different people contribute information, models face a particular challenge: continuous changes made by many contributors can erode quality without proper oversight. Members of System Architect/Engineering work with teams to define quality practices (model standards and model testing) and to ensure that they are followed.

The quality practices discussed below facilitate early learning cycles. As SAFe notes, “You can’t scale crappy code,” and the same is true for system models. Quality practices and strong version management allow engineers to confidently and frequently make model changes and contribute to the system’s intent.

Model Standards

Model standards help control quality and guide teams on how best to model. They may include:

- What information should be captured (including information necessary for compliance)

- Modeling notations (e.g., SysML) and parts of those notations (e.g., use case) to use or exclude

- Where modeling information should be placed for solution and subsystem elements

- Meta-information that should be stored with different types of model elements

- Links within the model or with other cross-discipline models

- Common types and dimensions used across the system

- Modeling tool properties and configuration

- Collaboration practices and proper use of any version control system(s)

If documents are being generated from the models, the document templates should be defined early, as they will influence many of these decisions. System designers need to know where to store the model elements and any metadata or links that may be used for queries, document generation, or compliance. As a best practice, create a high-level, full-system skeleton model early to validate these usage scenarios. Teams then populate the model as the system evolves.

Create Testable and Executable Models

SAFe Test-First practices help teams build quality into their products early, facilitating the continuous small changes found in Agile software development. Test-first creates a rich suite of test cases that allow developers to make changes more reliably without causing errors elsewhere in the system. Rich, automated tests are critical to creating a Continuous Delivery Pipeline.

Lean practices encourage testable, executable models (when feasible) to reduce the waste associated with downstream errors. Models should be testable against whatever assessment criteria exist for the domain or discipline:

- Mechanical models test for physical and environmental issues

- Electrical models test for logic

- Software models test for anomalies

- Executable system models test for system behavior

Most tools provide the ability to check models or to create scripts that can iterate across the models and identify anomalies.

Testing requirements models. Textual requirements are used in almost every system and, under current practice, are typically reviewed manually. The Agile practice of Behavior-Driven Development (BDD) defines automated acceptance tests for Features and Stories. These tests persist and continually validate the solution as it emerges. Although BDD is useful for testing Agile backlog items, its use at large scale has been limited. Even so, automating where possible and making requirements and tests one and the same is a useful goal.
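As a sketch of a requirement that doubles as its own test, the Python below writes a hypothetical requirement (REQ-42) as Given/When/Then scenarios. BDD tools such as Cucumber express such scenarios in Gherkin; here they are plain `test_` functions that a runner like pytest would discover. `can_start_mission` is an assumed stand-in for the system or executable model under test.

```python
# Hypothetical requirement REQ-42, written so the requirement and its test are
# one and the same: "When battery charge is below 20 %, the controller shall
# refuse to start a new mission."

LOW_BATTERY_THRESHOLD_PCT = 20.0


def can_start_mission(battery_pct: float) -> bool:
    """Stand-in for the system or executable model under test."""
    return battery_pct >= LOW_BATTERY_THRESHOLD_PCT


def test_req_42_refuses_mission_on_low_battery() -> None:
    # Given a battery charge of 15 %
    battery_pct = 15.0
    # When a new mission is requested
    accepted = can_start_mission(battery_pct)
    # Then the controller refuses to start it
    assert not accepted


def test_req_42_allows_mission_at_threshold() -> None:
    # Given a battery charge of exactly 20 %
    battery_pct = 20.0
    # When a new mission is requested
    accepted = can_start_mission(battery_pct)
    # Then the controller starts it
    assert accepted


if __name__ == "__main__":
    test_req_42_refuses_mission_on_low_battery()
    test_req_42_allows_mission_at_threshold()
    print("REQ-42 scenarios pass")
```

The boundary scenario at exactly 20 % pins down the wording "below 20 %", which a manual review could easily read either way.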

Testing analysis and design models. Designs represented in models can be tested using tools that have static analyzers or ‘checkers’ that identify something that deviates from what is standard, normal, or expected. Teams may add their own rules—model organization, modeling conventions and standards, required meta-information, etc. If analyzers don’t exist, scripts can iterate over the models to look for problems in the static model.
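Where no analyzer exists, a custom checker can be a list of small rules applied to every element. This minimal sketch assumes model elements exported as dictionaries and two hypothetical team rules: required meta-information (`owner`, `status`) and an ID naming convention. Keeping each rule a separate function makes it easy for teams to add their own.

```python
from __future__ import annotations

import re
from typing import Callable, Dict, Iterable, Iterator, List

ModelElement = Dict[str, str]
Rule = Callable[[ModelElement], Iterable[str]]

# Hypothetical team rules; real ones would come from the model standards.
REQUIRED_METADATA = ("owner", "status")
ID_PATTERN = re.compile(r"[A-Z]{2,4}-\d+")  # e.g. "REQ-12"


def required_metadata(element: ModelElement) -> Iterator[str]:
    for key in REQUIRED_METADATA:
        if not element.get(key):
            yield f"missing required meta-information '{key}'"


def naming_convention(element: ModelElement) -> Iterator[str]:
    if not ID_PATTERN.fullmatch(element.get("id", "")):
        yield f"id {element.get('id', '')!r} does not follow the naming convention"


def check_model(elements: Iterable[ModelElement], rules: List[Rule]) -> List[str]:
    """Apply every rule to every element and collect the findings."""
    findings = []
    for element in elements:
        for rule in rules:
            for message in rule(element):
                findings.append(f"{element.get('id', '<no id>')}: {message}")
    return findings


elements = [
    {"id": "REQ-1", "owner": "alice", "status": "approved"},
    {"id": "req_2", "owner": "bob"},
]
for finding in check_model(elements, [required_metadata, naming_convention]):
    print(finding)
```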

Models can also be tested dynamically. Each engineering discipline has its own solutions for assessing model quality, and these should be leveraged as part of the testing practice.

Testing traceability. To ensure proper queries, document generation, and compliance, models must conform to the defined linking structure.
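A traceability test can encode the linking structure from the model standards as data and check every link against it. The sketch below assumes a hypothetical structure (requirements link to designs or tests, designs link to tests) and reports dangling links, disallowed link types, and requirements with no outgoing link.

```python
from __future__ import annotations

from typing import Dict, List, Set, Tuple

# Hypothetical linking structure: allowed (source kind, target kind) pairs.
ALLOWED_LINKS = {
    ("requirement", "design"),
    ("requirement", "test"),
    ("design", "test"),
}


def check_traceability(kinds: Dict[str, str],
                       links: List[Tuple[str, str]]) -> List[str]:
    """Report dangling links, disallowed link types, and unlinked requirements."""
    problems: List[str] = []
    outgoing: Dict[str, Set[str]] = {element_id: set() for element_id in kinds}
    for source, target in links:
        if source not in kinds or target not in kinds:
            problems.append(f"dangling link {source} -> {target}")
            continue
        pair = (kinds[source], kinds[target])
        if pair not in ALLOWED_LINKS:
            problems.append(f"link {source} -> {target} not allowed: {pair[0]} -> {pair[1]}")
            continue
        outgoing[source].add(target)
    for element_id in sorted(kinds):
        if kinds[element_id] == "requirement" and not outgoing[element_id]:
            problems.append(f"requirement {element_id} has no design or test link")
    return problems


kinds = {"REQ-1": "requirement", "REQ-2": "requirement",
         "DES-1": "design", "TST-1": "test"}
links = [("REQ-1", "DES-1"), ("DES-1", "TST-1"),
         ("TST-1", "REQ-1"), ("REQ-1", "GHOST-9")]
for problem in check_traceability(kinds, links):
    print(problem)
```

Keeping the allowed pairs as data means the check changes when the model standards change, without editing the checking logic.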

Document generation. While possibly redundant with the traceability scripts above, document generation may have scripts to ensure that the model is structured properly and that all data exists to support all document templates. With large models, it’s often easier to debug a script than a document template.
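A pre-generation check can compare the fields a template needs with the data each element actually provides. This sketch assumes a hypothetical template written with Python `str.format` placeholders and uses `string.Formatter().parse` to discover them; an MBSE tool's own template language would need its own parser.

```python
from __future__ import annotations

import string
from typing import Dict, List, Set

# Hypothetical document template using str.format-style placeholders.
TEMPLATE = (
    "{id} {title}\n"
    "Rationale: {rationale}\n"
    "Verified by: {verification}\n"
)


def template_fields(template: str) -> Set[str]:
    """Collect the named placeholders the template will try to fill."""
    return {name for _, name, _, _ in string.Formatter().parse(template) if name}


def check_document_data(template: str, elements: List[Dict[str, str]]) -> List[str]:
    """List elements that lack data the template needs, before rendering."""
    required = template_fields(template)
    problems = []
    for element in elements:
        missing = sorted(key for key in required if not element.get(key))
        if missing:
            problems.append(f"{element.get('id', '<no id>')}: missing {', '.join(missing)}")
    return problems


elements = [
    {"id": "REQ-1", "title": "Start-up", "rationale": "Safety", "verification": "TST-1"},
    {"id": "REQ-2", "title": "Shutdown", "verification": "TST-2"},
]
problems = check_document_data(TEMPLATE, elements)
print(problems)  # → ['REQ-2: missing rationale']
if not problems:
    print("".join(TEMPLATE.format(**element) for element in elements))
```

A failure here names the element and the missing field directly, which is the debugging advantage over tracing a half-rendered document.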

Learn More

[1] INCOSE Systems Engineering Handbook. 2019. https://www.sebokwiki.org/wiki/INCOSE_Systems_Engineering_Handbook

[2] Leveraging Digital Twin Technology in Model-Based Systems Engineering. 2019. https://www.mdpi.com/2079-8954/7/1/7/pdf

[3] The Digital Transformation of the Product Management Process: Conception of Digital Twin Impacts for the Different Stages

Last update: 10 February 2021